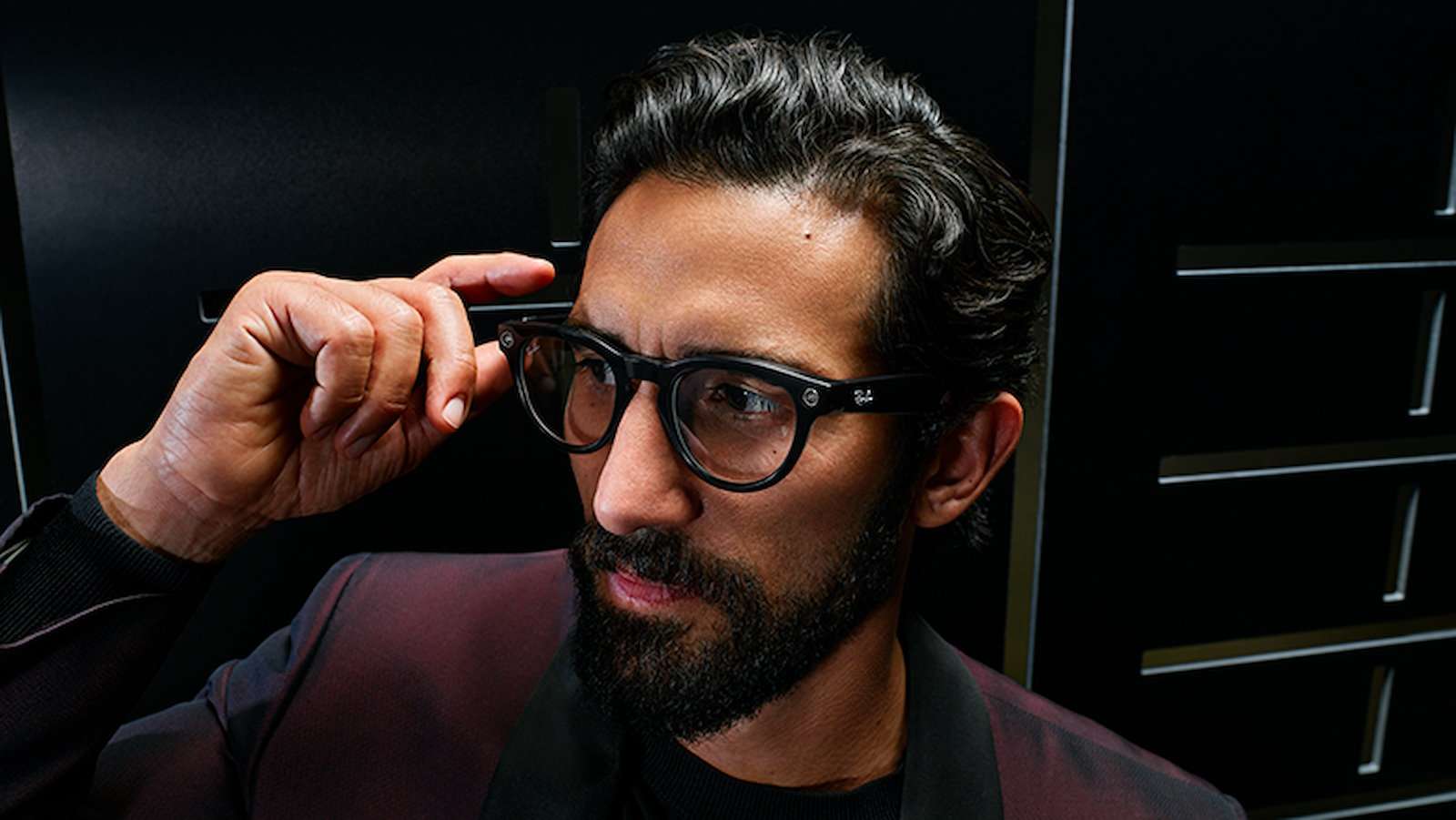

Meta has just announced an update to its Ray-Ban Meta smart glasses that activates a smart assistant capable of answering questions about the wearer’s surroundings. For now, however, access is limited.


Last September, during its Meta Connect conference, Facebook’s parent company introduced a new generation of Ray-Ban connected glasses. One of their main features is Meta AI. It had been announced for 2024, and Meta has just enabled it for a few users via an update.

These glasses do not have a screen; all interactions are done by voice. They are essentially a voice assistant equipped with a camera and worn on the face. Multimodal AI allows the glasses to understand spoken questions, use the camera to visually analyze what is in front of the user, and then respond out loud.

The feature is only available in the United States

To activate the AI, simply say “Hey Meta.” You can then ask it to look, followed by a question (“Look and…”). On Instagram, Mark Zuckerberg demonstrated this by asking it which pants to wear with a shirt he was holding. The AI was also asked to write a caption for an image, identify a fruit, and translate some text. An assistant that is always at hand like this could be particularly valuable for visually impaired people. Each time, the AI takes a photo with the glasses’ camera for analysis, then saves the image and the query in the companion smartphone app, creating a history that the user can browse later.

With this new feature, Ray-Ban Meta glasses look a lot like Humane’s AI Pin, a smart badge. However, the AI Pin is designed to replace the smartphone entirely, whereas Meta’s connected glasses only work when paired with a smartphone. For now, multimodal AI is in a limited-access beta and is only available in the United States.